Spice Up Your Caching with Convoyr

Where it All Started

fetch

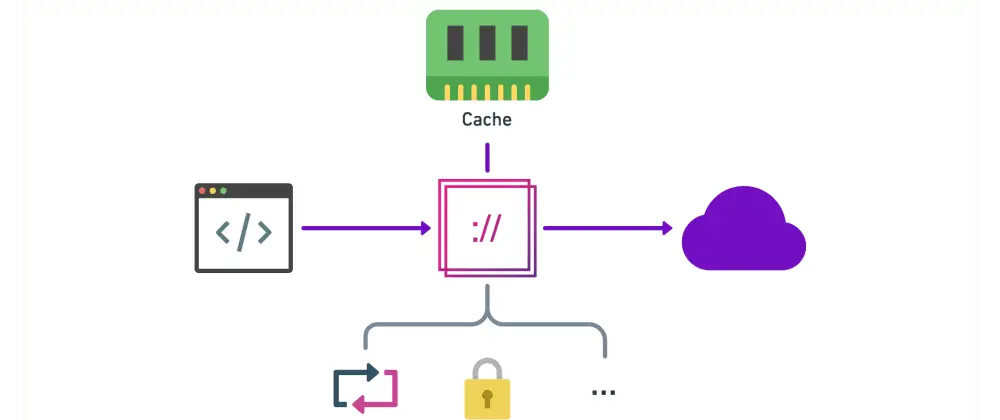

💡 The Idea Behind Convoyr

HttpClient

🐢 The Network Latency Performance Problem

🚒 Caching to the Rescue

🍺 The Freshness Problem

🤔 Why make a choice?

Observable vs Promise

HTTPClient, fetch

✌️ Emit both cached & network
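
The idea in this heading can be sketched without any framework. The snippet below is a minimal, hypothetical illustration, not Convoyr's implementation: `cacheThenNetwork`, `Emission`, and the callback-style `fetchFresh` parameter are names invented here. It emits the cached value first when one exists, then the fresh value once the (simulated) network call completes, and tags each emission with an `isFromCache` flag.

```typescript
// Hypothetical sketch (not Convoyr's code): emit the cached value, then the fresh one.
type Emission<T> = { data: T; isFromCache: boolean };

function cacheThenNetwork<T>(
  key: string,
  cache: Map<string, T>,
  fetchFresh: (done: (value: T) => void) => void, // stand-in for a network call
  emit: (emission: Emission<T>) => void
): void {
  const cached = cache.get(key);
  if (cached !== undefined) {
    // First emission: whatever is already in the cache.
    emit({ data: cached, isFromCache: true });
  }
  fetchFresh((fresh) => {
    // Second emission: the network answer, which also refreshes the cache.
    cache.set(key, fresh);
    emit({ data: fresh, isFromCache: false });
  });
}

// Usage with a fake "network" that answers immediately.
const weatherCache = new Map<string, number>([['/weather/lyon', 18]]);
const emissions: Emission<number>[] = [];
cacheThenNetwork(
  '/weather/lyon',
  weatherCache,
  (done) => done(21),
  (emission) => { emissions.push(emission); }
);
```

A consumer that only ever keeps the latest emission, as the components in this section do, shows the cached value right away and replaces it once the fresh one arrives.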

@Component({
  template: `{{ weather | json }}`
})
export class WeatherComponent {
  weather: Weather;

  ...() {
    this.http.get<Weather>('/weather/lyon')
      .subscribe(weather => this.weather = weather);
  }
}

@Component({
  template: `{{ weather$ | async | json }}`
})
export class WeatherComponent {
  weather$ = this.http.get<Weather>('/weather/lyon');
}

Convoyr cache plugin

npm install @convoyr/core @convoyr/angular @convoyr/plugin-cache

import { ConvoyrModule } from '@convoyr/angular';
import { createCachePlugin } from '@convoyr/plugin-cache';

@NgModule({
  imports: [
    ...
    HttpClientModule,
    ConvoyrModule.forRoot({
      plugins: [createCachePlugin()],
    }),
  ],
  ...
})
export class AppModule {}

createCachePlugin({
  addCacheMetadata: true
})

http.get('/weather/lyon')
  .subscribe(data => console.log(data));

{
  data: {
    temperature: ...,
    ...
  },
  cacheMetadata: {
    createdAt: '2020-01-01T00:00:00.000Z',
    isFromCache: true
  }
}

import { and, matchOrigin, matchPath } from '@convoyr/core';

createCachePlugin({
  shouldHandleRequest: and(
    matchOrigin('marmicode.io'),
    matchPath('/weather')
  )
})

createCachePlugin({
  storage: new MemoryStorage({ maxSize: 2000 }), // 2000 requests
})

createCachePlugin({
  storage: new MemoryStorage({ maxSize: '2 mb' }), // 2MB
})
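
To make the entry-count variant of `maxSize` more concrete, here is a minimal sketch of a size-bounded in-memory cache. It is an illustration under stated assumptions, not Convoyr's `MemoryStorage`: the class name `BoundedCache` and the least-recently-used eviction policy are choices made for this example, since the text above does not say which entry gets dropped when the limit is reached.

```typescript
// Hypothetical sketch: a Map-backed cache that keeps at most `maxEntries` items.
// The eviction policy (least recently used) is an assumption for illustration.
class BoundedCache<V> {
  private readonly entries = new Map<string, V>();
  private readonly maxEntries: number;

  constructor(maxEntries: number) {
    this.maxEntries = maxEntries;
  }

  get(key: string): V | undefined {
    if (!this.entries.has(key)) {
      return undefined;
    }
    const value = this.entries.get(key) as V;
    // Re-insert so the key becomes the most recently used one
    // (a Map iterates in insertion order).
    this.entries.delete(key);
    this.entries.set(key, value);
    return value;
  }

  set(key: string, value: V): void {
    this.entries.delete(key);
    this.entries.set(key, value);
    if (this.entries.size > this.maxEntries) {
      // The first key in iteration order is the least recently used one.
      const oldestKey = this.entries.keys().next().value;
      if (oldestKey !== undefined) {
        this.entries.delete(oldestKey);
      }
    }
  }

  has(key: string): boolean {
    return this.entries.has(key);
  }
}

const responses = new BoundedCache<string>(2);
responses.set('/weather/lyon', 'sunny');
responses.set('/weather/paris', 'cloudy');
responses.get('/weather/lyon');          // marks Lyon as recently used
responses.set('/weather/nice', 'windy'); // exceeds the limit, so Paris is evicted
```

A byte-based limit such as `'2 mb'` would have to track the serialized size of each entry rather than the number of entries; the sketch leaves that out.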